Spotting faces in the crowd

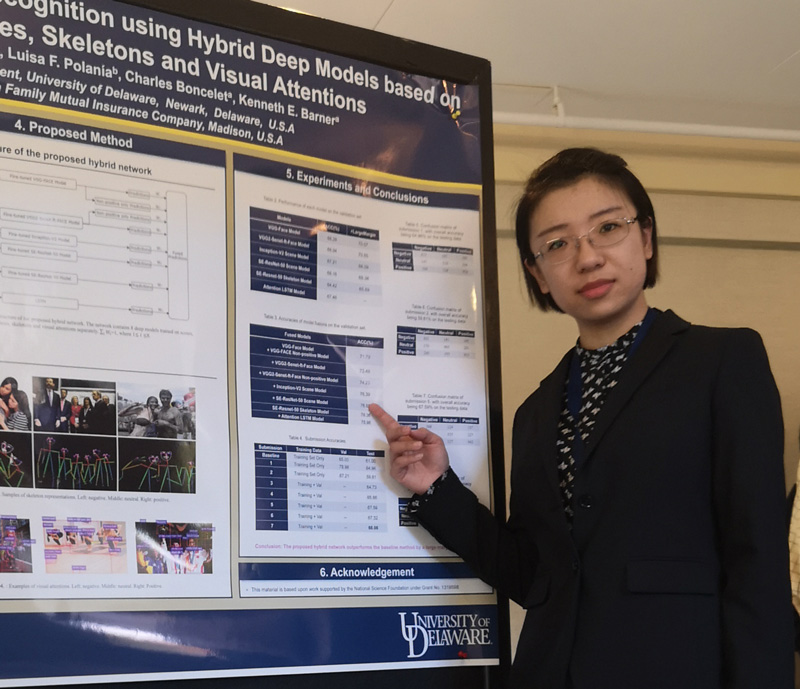

Photo by iStock and courtesy of Xin (Cindy) Guo | December 18, 2018

Team develops solution to determine emotions of people in group photographs

The saying goes that a picture is worth a thousand words. But what if you can’t tell what the picture shows? From awkward family photos to class photographs, sometimes it’s tricky to tell what the people in the pictures are thinking.

Using machine learning and deep neural networks, a team from the University of Delaware is figuring that out. Led by doctoral student Xin (Cindy) Guo, the team took first place in the Group-level Emotion Recognition sub-challenge, one of three sub-challenges in the 6th Emotion Recognition in the Wild (EmotiW 2018) Challenge. Winners were announced at the ACM International Conference on Multimodal Interaction 2018, held in October 2018.

Teams were given a set of images, each picturing a group of people, and tasked with developing an algorithm that could classify the people in the photos as happy, neutral or negative. Each team had a month and a half and seven attempts to produce the most accurate algorithm possible. The UD team’s winning solution, titled “Group-Level Emotion Recognition using Hybrid Deep Models based on Faces, Scenes, Skeletons and Visual Attentions,” will be published by ACM. The group fused eight different models to build the solution, which works on photographs across a range of image quality, from blurry to clear.
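For readers curious what fusing several models can look like in practice, here is a minimal sketch of one common approach, weighted averaging of each model's class probabilities. The article does not describe how the UD team combined its eight models, so the function name `fuse_predictions`, the weights and the sample probabilities below are all hypothetical and are not the team's method.

```python
# Illustrative late-fusion sketch. This is NOT the UD team's actual method;
# the function name, weights and sample numbers are assumptions.

LABELS = ("happy", "neutral", "negative")


def fuse_predictions(model_probs, weights=None):
    """Combine per-model class probabilities by weighted averaging.

    model_probs: list of [p_happy, p_neutral, p_negative], one per model.
    weights: optional list of non-negative weights, one per model.
    Returns (predicted_label, fused_probabilities).
    """
    if not model_probs:
        raise ValueError("need at least one model's probabilities")
    if weights is None:
        weights = [1.0] * len(model_probs)  # treat every model equally
    if len(weights) != len(model_probs):
        raise ValueError("need exactly one weight per model")

    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    fused = [0.0] * len(LABELS)
    for probs, weight in zip(model_probs, weights):
        if len(probs) != len(LABELS):
            raise ValueError("each model must score every label")
        for i, p in enumerate(probs):
            fused[i] += weight * p / total

    # Pick the label with the highest fused probability.
    best = max(range(len(LABELS)), key=fused.__getitem__)
    return LABELS[best], fused


# Hypothetical outputs from three models looking at the same group photo.
example = [
    [0.70, 0.20, 0.10],  # hypothetical face-based model
    [0.40, 0.50, 0.10],  # hypothetical scene-based model
    [0.60, 0.30, 0.10],  # hypothetical skeleton-based model
]
label, fused = fuse_predictions(example)
print(label, [round(p, 2) for p in fused])
```

Averaging lets a model that reads one cue, such as the scene, be outvoted when the other cues agree with each other. Giving a more reliable model a larger weight is one simple way to tune the combination.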

The goal of such work? To automatically classify images uploaded to websites.

“When people search, they would see the images they are looking for because the algorithm would run and label whether people are happy or not,” said Guo. “It could be used to analyze the emotions of a group of people pictured at a protest, a party, a wedding, or a meeting, for example. This technology could also be developed to determine what kind of event a given image shows.”

The team also included doctoral student Bin Zhu, alumna Luisa F. Polania, professor Charles Boncelet, and Kenneth E. Barner, the Charles Black Evans Professor of Electrical and Computer Engineering and department chair. Barner has guided the work and is Guo’s advisor.

This work is supported by the National Science Foundation under Grant No. 1319598.
