Making artificial intelligence trustworthy and ethical

Photos by Maria Errico December 17, 2025

Data Science Symposium explores collaborative development of AI

No one expects a keyboard, a hammer or a scalpel to have built-in ethical standards that guide its work. Only the users of such tools can chart those paths.

But artificial intelligence (AI) — the term given to the powerful computational tools that can capture enormous amounts of data, sort it out, learn from that data, apply it to problems, evaluate environments, make rapid decisions, create new imagery and written works, detect anomalies, solve problems and determine whether to accelerate or hit the brakes — is a whole ’nother tool.

It is, in fact, an amalgamation of data, tools, technologies, information and analytical processes, all connected in powerful and often undisclosed ways.

As its use expands and finds new applications around the world, the race to harness, steer and perhaps even master AI technology has been unfolding at breakneck speed. There is pervasive, consistent pressure to do more with it — as soon as possible.

At a recent symposium organized by the Data Science Institute at the University of Delaware, though, keynote speaker Katie Shilton made the case for a significant change in pace.

“We’re in an era of ‘hurry up and adopt,’” said Shilton, professor in the University of Maryland’s College of Information and Institute for Advanced Computer Studies. “But we have to figure out how to support students and how this challenges fundamental science. We don’t have to adopt fast.”

Shilton’s keynote was titled “No AI Without Us: Putting Co-Development at the Center of Ethical Data Science and AI.” Co-development and participatory AI, the intentional inclusion of diverse voices and collaborators during the development of AI systems, were central to the discussion. Shilton’s Ethics and Values in Design Lab and the multi-university work of the Institute for Trustworthy AI in Law and Society (TRAILS), one of the National AI Institutes, are among the collaborative efforts working on these problems.

The public expects certain guardrails, Shilton said, as evidenced in the fallout from a 2014 study of “emotional contagion” triggered by posts and responses on Facebook. Internal Facebook data were analyzed without the knowledge or consent of those whose posts and responses were part of the study. Informed consent is the gold standard for responsible scientific research involving human subjects, as the journal that published the study noted in an editorial expression of concern a month after the study appeared.

Concern over the misuse of personal information and biometric data, the spread of misinformation, and inherent bias has added to a growing “techlash,” defined as public backlash against overreach by the technology industry.

“We are caught up in the techlash, too,” Shilton said.

Trust is a critical issue when AI is part of the equation, she said, pointing to an April 2025 report by the Pew Research Center that showed the public was notably less confident than experts that AI would ultimately be more beneficial than not. In a September 2025 report, Pew said Americans are more concerned than they are hopeful about the impact of artificial intelligence on society.

Collaborative efforts

To address this mistrust and build trustworthy, reliable systems, more transparency, co-development and auditing are required, Shilton said. It is a slower, sometimes uncomfortable process. Aligning the technology with human values such as justice, fairness and privacy is challenging, and recognizing and addressing bias requires many perspectives.

Ethical codes and related analytical tools are available and under development, Shilton said. She pointed to two examples: the Association for Computing Machinery’s (ACM) Code of Ethics and the American Civil Liberties Union’s Algorithmic Equity Toolkit, which is designed to help people understand government technologies and their impact.

She also pointed to mapping processes that identify AI breakdowns and necessary repairs.

“Particularly for mission-driven organizations, this is a very worthwhile approach,” she said.

“We’re coming up with metrics of trustworthiness,” she said. “How far can these methods go? Can we build trust in a scalable way? We’re not quite there.”

Getting there requires a different pace.

“These methods will slow things down,” Shilton said. “But it’s worth it.”

Robust discussion

Many challenges related to the ethical development and use of AI were raised in a panel discussion that followed Shilton’s keynote address.

Moderated by UD’s Kathy McCoy, professor of computer and information sciences, the panel included Shilton; Matthew Holtman, executive director at JPMorgan Chase & Co.; Thomas Powers, associate professor of philosophy and director of UD’s Center for Science, Ethics and Public Policy; Nii Adjetey Tawiah, assistant professor of sociology at Delaware State University; and Jay Yonamine, advisor to EdgeRunner AI, which develops device-based generative AI assistants.

AI has already proven its potential for tremendous good. But there are important problems to address.

“AI is not an ethical thinker,” Delaware State’s Tawiah said. “But you are. You need to check. AI will hallucinate. It should supplement your work, not do your work for you.”

Yonamine reinforced these observations, noting that this is an area where academia needs to “carry the flag.”

The ruthless pursuit of truth is a core responsibility of research universities, he said, before adding that “universities need to hold themselves to a higher standard.”

Certain tensions arise when academia, industry and government entities collaborate. Research timelines often run much longer than corporate deadlines, and it can be uncomfortable when research points to problems in industry or government practices. Access to good data is another sticking point.

“Research is independent of data ownership,” Tawiah said. “And when you have two partners — a small one and a giant one — the giant is going to have control. I don’t know if industry will allow academics to have that power.”

The data-sharing problem was a hard challenge even before AI emerged, Shilton said.

“When we worked with Meta, they were very serious about allowing academics to study elections,” she said. “Yet the data sharing was very hard.”

Collaboration aimed at mutual benefit for researchers and industry is easy to accept and cheer, UD’s Powers said.

“The hard part comes when industry gets a message they don’t want to hear,” he said. “Having a temporary in-house researcher who gives you bad news — I worry there is not a lot of tolerance for those kinds of messages.”

Yonamine said he could see a world where things get tighter, where everyone works in their own interests and “no one is asking what’s best for the consumer in the short, medium and long term.” Academic research is an important way to address some of that, he said.

Holtman said he sees many junior data scientists who are highly motivated to deal with ethical issues.

“They believe in it and really want to contribute,” he said. “Maybe we need to find ways to incentivize that.”

On the other hand, Shilton noted that while students are taught ethics from the “get-go,” many enter the workforce and face a boss who says, “No. Build it.”

Where do you draw the ethical boundaries?

“I like to think ethics occupies the space between public opinion and the law,” Powers said. “In some ways, this can be predictive of law or changes in law by policy. Ethical reasoning is accessible to everyone who takes the time to read and think. It’s not written down in law the way this question — does this violate copyright or not? — is. That’s not true in ethics. It’s hard. The outcomes are often uncertain. In a university setting, that skill is practiced or at least identified.”

The public expects researchers to hold themselves to high ethical standards, Shilton said.

“But finding out how to guide it?” she said. “When trying to figure out AI guidelines, data ethics questions almost always wind up with ‘it depends.’ Where did you get the dataset? How do you plan to use it?”

Do users need to have the full recipe behind an AI menu? Do they need to know all the ingredients, how they were sourced and selected? Are ethics baked into every dish or offered à la carte? Who monitors all of that?

The questions seem endless. And that’s an important reason to have many perspectives in the development of AI systems.

Diverse teams bring broader knowledge and experience, and that helps keep teams accountable, Shilton said.

Diverse perspectives

The symposium drew participants from diverse fields.

Laurie Christianson, a computational chemist and data scientist, said she was glad she attended.

“I look at large datasets of chemistry and biological screening data, analyze it and use it to come up with better product concepts and research decisions,” said Christianson, Associate Global R&D Fellow with FMC, an agricultural sciences firm. “It’s interesting to see so much focus on ethical considerations. Concerns are clearly coming to the forefront. These are very powerful tools. It feels hopeful that people are focused more on these elements rather than just data, data, data and push, push, push.”

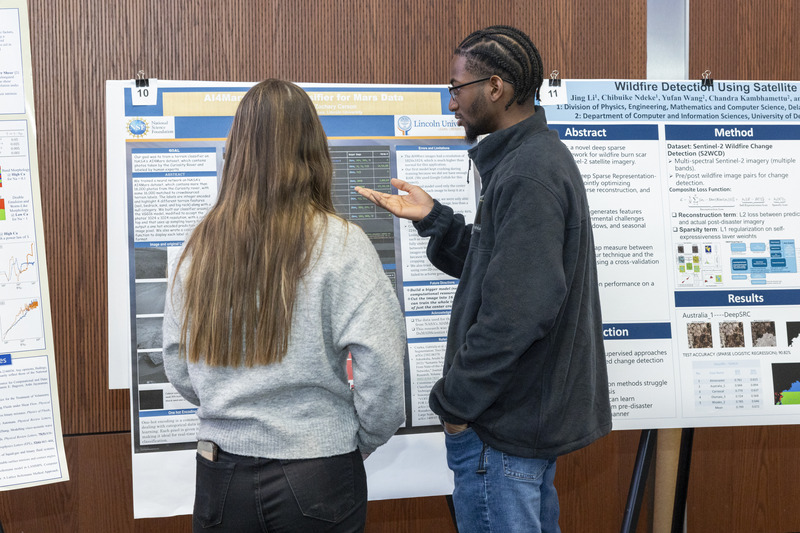

Abdourahim Sylla, a junior computer science major at Lincoln University, was among those who submitted posters for the event. He loves to explore data science and the potential benefits AI brings to so many aspects of research.

“AI is a tool for better development, to make processes faster,” he said. “At the end of the day, though, you still have to oversee it.”

Sylla said he uses AI on a daily basis.

“It’s like a big library of all these things,” he said. “Most of what’s known is already stored in there. We forget. AI doesn’t forget. Right now, it’s kind of new. There’s a lot of skepticism around it. But at the end of the day, if companies — and this is a big ‘if’ — are not too greedy about it, AI will be a big part of how we connect everything together.”

AI can be disruptive, said Amy Slocum, director of Delaware’s Established Program to Stimulate Competitive Research (DE-EPSCoR), a federal-state partnership designed to build collaborations to expand Delaware’s research and education infrastructure.

“It can be scary like any change that disrupts what we are used to doing,” Slocum said. “AI seems to be here to stay, and we must teach the next generation the skills to verify the information and embrace the changes to come.”

Awards for open data impact and student teams

The symposium highlighted UD’s growing strengths in data-driven collaboration through the Open Data Impact Award and Hen Street Hacks Award.

The Open Data Impact Award, launched by the UD Library, Museums and Press with support from DSI and DE-EPSCoR, honors research teams whose open datasets advance research, teaching and public engagement. This year’s awardees, chosen from over 20 submissions, represent diverse fields including political science and international relations, geography and spatial sciences, and linguistics and cognitive science.

The Hen Street Hacks competition featured student teams tackling real-world challenges using datasets from industry partners such as Best Egg and Bank of America, showcasing how UD talent is driving innovation in AI and data science.
