A sea of data

Speaker addresses need to derive meaningful information from raw data

11:38 a.m., April 8, 2016--On April 3, 2016, news media outlets across the globe published articles about 11.5 million leaked documents from a Panama law firm involved in money laundering.

Although 11.5 million is a large number, most readers probably had no idea what went into drawing meaningful conclusions from that huge cache of documents.

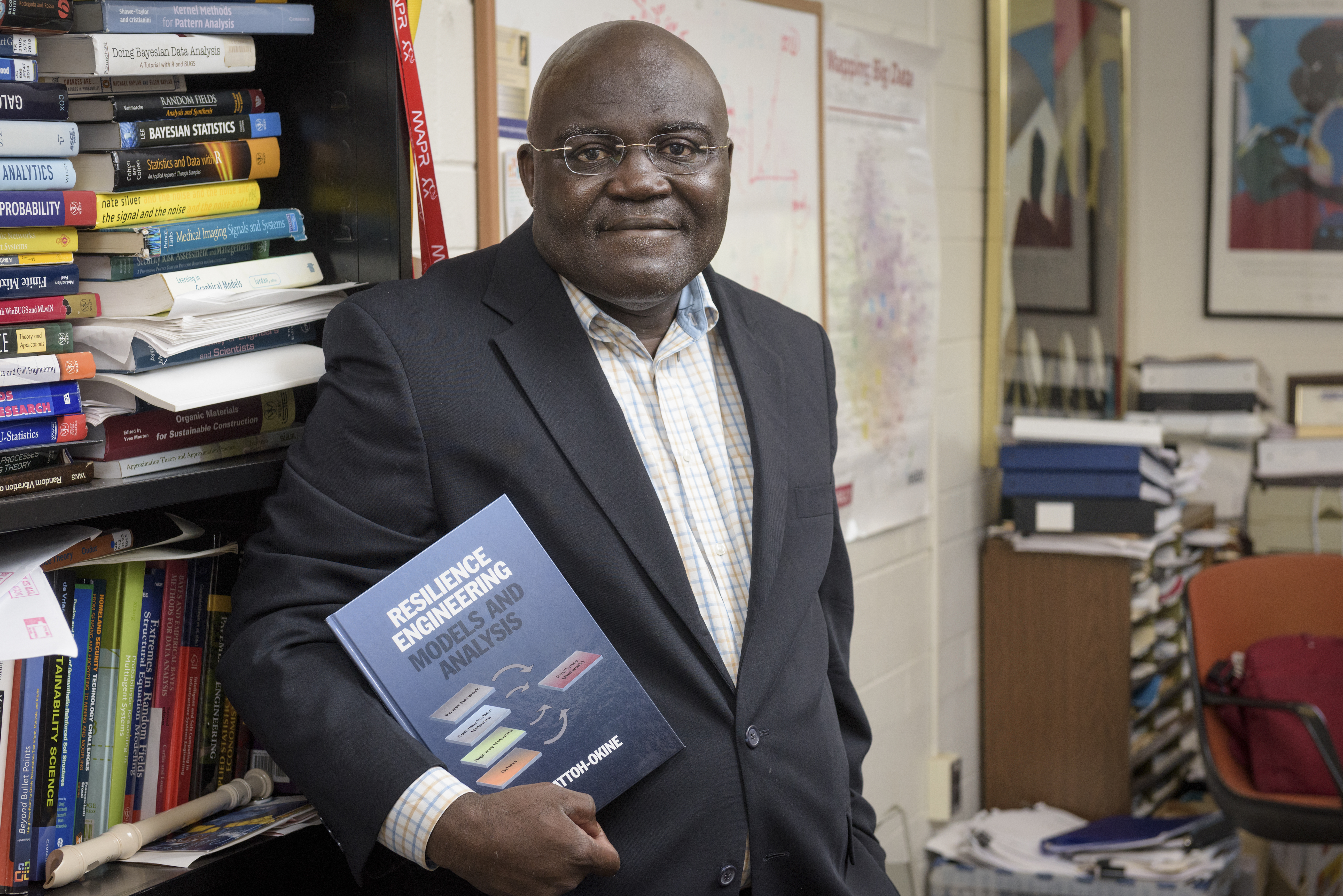

In fact, it took some 400 journalists at more than 100 news organizations an entire year to peruse the 2.6 terabytes of data in those documents and piece together the story of a company that helped the world’s wealthiest people set up offshore bank accounts.

In a lecture hosted by the University of Delaware Cybersecurity Initiative on Wednesday, April 6, computer scientist James Nolan used the Panama Papers as an example of the need for new machine learning techniques to address the problems associated with living in a data-rich, information-poor world.

“Why can’t we put that 2.6 terabytes through an algorithm and spit out relationships in a few hours?” he asked.

Nolan emphasized the distinction between raw data — which is collected from cameras, phones, sensors, satellites, written documents, cyber-logs, and other sources — and information, which is the knowledge gained from studying data and teasing out relationships, resolving ambiguities, understanding scenes, and labeling events.

In the game of “data wrangling,” humans currently hold most of the cards, but Nolan shared insights into some strategies under development that could enable machines to quickly do the work that currently takes people weeks, months, and even years to complete.

In some cases, it’s as straightforward as routing data to the right place. As an example, Nolan explained that with video, the source drives the type of algorithm that will work.

“It would be great if a machine could identify the source of the video — say, from an unmanned aerial system versus a surveillance camera — and then automatically route the captured scenes to the correct class of algorithm,” he said.
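
The routing step Nolan describes can be pictured as a simple dispatch table. The sketch below is only an illustration: it assumes the source type has already been identified, which is the hard part he was pointing to, and the handler names and source labels are hypothetical rather than anything presented in the lecture.

```python
from typing import Callable, Dict


# Hypothetical handlers, one per class of video source.
def analyze_aerial(clip: str) -> str:
    return f"aerial pipeline processed {clip}"


def analyze_surveillance(clip: str) -> str:
    return f"fixed-camera pipeline processed {clip}"


# Assumed source labels mapped to the algorithm class suited to each.
ROUTES: Dict[str, Callable[[str], str]] = {
    "uas": analyze_aerial,
    "surveillance": analyze_surveillance,
}


def route_video(source: str, clip: str) -> str:
    """Send a clip to the handler registered for its source type."""
    handler = ROUTES.get(source)
    if handler is None:
        raise ValueError(f"no algorithm registered for source {source!r}")
    return handler(clip)
```

Calling `route_video("uas", "clip1")` hands the clip to the aerial handler, while an unrecognized source raises an error instead of being sent to the wrong algorithm.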

In other cases, the challenge is to teach a machine how to deal with context. For example, a search for the word “bomb” on Twitter returns all kinds of references that have nothing to do with explosive devices. The goal here is to teach a machine how to filter out occurrences that aren’t relevant.
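
A very crude version of such a filter keeps a post only when the keyword appears alongside words that suggest the literal meaning. This is a minimal sketch under stated assumptions: the context word list is hypothetical, and a hand-written rule like this is a stand-in for illustration, not the learned approach discussed in the talk.

```python
import re

# Hypothetical context words suggesting a literal explosive device.
LITERAL_CONTEXT = {"explosive", "detonated", "police", "evacuated", "device", "squad"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def is_relevant(text, keyword="bomb", context=LITERAL_CONTEXT):
    """True only if the keyword occurs together with a literal-context word."""
    tokens = tokenize(text)
    if keyword not in tokens:
        return False
    return any(token in context for token in tokens)
```

With this rule, "Police evacuated the station after a bomb threat" is kept, while "That new album is the bomb" is filtered out. A fixed word list misses many cases, which is why the problem calls for machine learning.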

Techniques being developed and refined include statistical topic modeling, which identifies the topics being addressed in a corpus of text and then enables experts to drill down to find what they’re looking for, and semantic role labeling, which allows relationships to be extracted from data.

In statistical topic modeling, words are merely tokens, so the specific language doesn’t matter. However, semantic role labeling, where the sentence is the basic unit of communication, is highly language dependent.
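
To see why language doesn’t matter when words are merely tokens, consider the word-count table that statistical topic models typically start from. The sketch below builds only that table, not a topic model itself, and the sample documents are made up for illustration.

```python
from collections import Counter


def bag_of_words(documents):
    """Return a sorted vocabulary and one row of token counts per document."""
    # Tokens are whitespace-separated strings; no language knowledge is used.
    vocab = sorted({token for doc in documents for token in doc.split()})
    matrix = []
    for doc in documents:
        counts = Counter(doc.split())
        matrix.append([counts.get(token, 0) for token in vocab])
    return vocab, matrix


# Made-up documents mixing English and Spanish tokens.
vocab, matrix = bag_of_words(["offshore account offshore", "cuenta offshore"])
```

The English and Spanish words are counted in exactly the same way, because the code never looks inside a token. Semantic role labeling, by contrast, needs the grammar of each sentence, which is where language dependence comes in.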

Nolan concluded by sharing several examples of projects currently underway, for clients ranging from the military to financial institutions, at Decisive Analytics Corporation, where he is the vice president for analytic technologies.

Article by Diane Kukich

Photo by Evan Krape